24 Nov 2021

Using AWS to analyse Elephant Rumbles

Benjamin Ellis - AIMS CDT Student

As part of my second mini-project in the AIMS CDT, I investigated how African elephants communicate. This work was supervised by Andrew Markham and Beth Mortimer.

Elephants produce a wide range of vocalisations to communicate with one another, such as snorts, trumpets and infrasonic rumbles. Because these rumbles are so low in frequency, they cannot be heard by the human ear, but they can be detected by microphones or seismometers. Previous fieldwork [1] collected a large set of both seismic and microphone recordings over a three-week deployment. The aim of my project was to build a pipeline that clusters this data, and to see whether the clusters reveal anything about elephant communication.

I chose to analyse the seismic data because the rumbles are very clearly visible in it. However, even loading the data is a challenge: there are 135 GB of these rumbles, and computing spectrograms over this much data is computationally intensive. In addition, GPUs are needed to run the neural networks in both the detector, which finds elephant rumbles, and the clusterer, which groups the detected rumbles. I trained these networks on the GPUs of AWS EC2 g4dn.4xlarge instances, and also did much of the code development on AWS, because running the loader, detector or clusterer locally was infeasible. By caching spectrograms once they had been computed, so that later epochs could reuse them, and by loading data with multiple threads, I reduced the time per epoch from over 100 hours to 22 minutes. To cluster the rumbles, I used contrastive predictive coding (CPC) [2].
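A minimal sketch of the caching-plus-threads idea, assuming each segment's spectrogram is deterministic and therefore only needs to be computed once. Everything here is hypothetical: `SIGNALS` stands in for rumble segments read from disk, `cached_spectrogram` and `load_epoch` are illustrative names, and the naive DFT replaces whatever spectrogram routine the real pipeline used. It uses only the Python standard library.

```python
import cmath
import math
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache

# Hypothetical stand-in dataset: in a real pipeline each ID would map to a
# rumble segment read from the seismic recordings on disk.
SIGNALS = {
    i: tuple(math.sin(2 * math.pi * (i + 1) * n / 64) for n in range(256))
    for i in range(8)
}


def magnitude_spectrogram(signal, frame_len=64, hop=32):
    """Naive STFT magnitude (Hann window, plain DFT); slow but dependency-free."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_len) for n in range(frame_len)]
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        seg = [signal[start + n] * window[n] for n in range(frame_len)]
        # Keep only the non-negative frequency bins 0 .. frame_len // 2.
        frames.append([
            abs(sum(seg[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                    for n in range(frame_len)))
            for k in range(frame_len // 2 + 1)
        ])
    return frames


@lru_cache(maxsize=None)
def cached_spectrogram(segment_id):
    # Computed on first request; every later epoch gets the cached result.
    return magnitude_spectrogram(SIGNALS[segment_id])


def load_epoch(segment_ids, num_workers=4):
    # Worker threads fetch (or compute) spectrograms concurrently;
    # pool.map returns results in the same order as segment_ids.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        return list(pool.map(cached_spectrogram, segment_ids))


specs = load_epoch(list(range(8)))   # first epoch: every spectrogram is computed
specs = load_epoch(list(range(8)))   # second epoch: served from the cache
```

Because of Python's global interpreter lock, threads mainly pay off when workers spend time waiting on disk or network reads; CPU-heavy spectrogram code would more typically use processes or a vectorised library. At a scale of 135 GB the cache would also live on disk rather than in memory.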

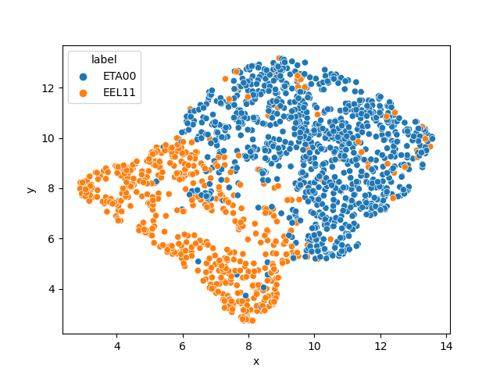

Some example clusters for the elephant data are shown in the figure. In this data, the rumbles were detected by hand. There are two clear clusters, and they correspond to two different seismic stations. This suggests that CPC is separating the recordings based on properties of the background noise, or on differences in how the seismometers were set up, rather than on any aspect of elephant communication.
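One way to quantify whether clusters track stations rather than rumble content is cluster purity with respect to station labels: if every cluster contains recordings from a single station, purity is 1. The function below is a minimal stdlib sketch; `purity` and the example labels are illustrative and not part of the project's pipeline.

```python
from collections import Counter


def purity(cluster_ids, labels):
    """Fraction of items whose label equals the majority label of their cluster."""
    by_cluster = {}
    for cluster, label in zip(cluster_ids, labels):
        by_cluster.setdefault(cluster, []).append(label)
    # For each cluster, count how many items carry its most common label.
    majority_total = sum(
        Counter(members).most_common(1)[0][1] for members in by_cluster.values()
    )
    return majority_total / len(labels)


# Hypothetical example: two clusters that line up exactly with two stations.
clusters = [0, 0, 0, 1, 1, 1]
stations = ["A", "A", "A", "B", "B", "B"]
station_purity = purity(clusters, stations)
```

Purity rises trivially as the number of clusters grows, so it is most informative when compared across label types for the same clustering, for example station labels versus any available behavioural labels.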

This could perhaps be addressed by taking rumbles that were detected on multiple microphones and training the model to predict the same rumble as recorded on another microphone, so that the learned features describe the rumble rather than the recording position. Cropping the rumbles more tightly might also help.
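The fix suggested above can be sketched as an InfoNCE-style contrastive loss (the loss CPC [2] optimises) with a different choice of positive pair: instead of a future time step, the positive for a rumble is the same rumble recorded on another sensor, and the other rumbles from that sensor act as negatives. This is a hypothetical variant, not what the project implemented; plain Python lists stand in for network embeddings, and `temperature` is an assumed hyperparameter.

```python
import math


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def cross_sensor_info_nce(emb_a, emb_b, temperature=0.1):
    """emb_a[i] and emb_b[i] embed the same rumble i recorded on sensors A and B.

    For each anchor in A, the matching rumble in B is the positive and every
    other rumble in B is a negative; returns the mean cross-entropy.
    """
    a = [normalize(v) for v in emb_a]
    b = [normalize(v) for v in emb_b]
    total = 0.0
    for i, anchor in enumerate(a):
        # Cosine similarities scaled by the temperature act as logits.
        logits = [dot(anchor, other) / temperature for other in b]
        m = max(logits)  # subtract the max for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_sum_exp - logits[i]
    return total / len(a)


emb = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
aligned_loss = cross_sensor_info_nce(emb, emb)                    # pairs match
shuffled_loss = cross_sensor_info_nce(emb, emb[1:] + emb[:1])     # pairs mismatched
```

Because every candidate comes from sensor B, features that merely identify the sensor cannot distinguish the positive from the negatives, so minimising this loss pushes the model towards features of the rumble itself.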

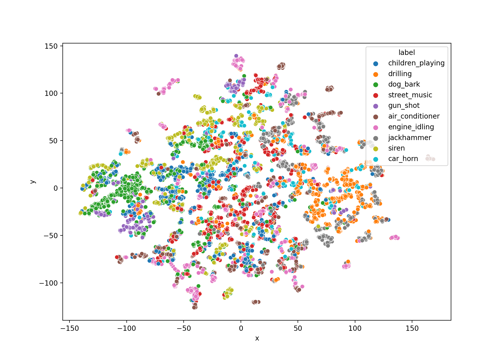

We also tested the clustering on urban sounds. The figure shows the clusters generated for the UrbanSound8K dataset.

Some classes, such as dog barks and gunshots, are well separated, while others, such as air conditioners, are not convincingly clustered at all. In general, the method seems to cluster short sounds better than long ones. This might be because it uses a fixed look-ahead parameter, which could instead be adjusted according to the input length.
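In CPC, a context vector at time step t is trained to predict the encoded input at steps t + 1 to t + K, where K is the look-ahead. A minimal sketch of making K depend on clip length, assuming the clip has already been encoded into `num_latent_steps` latent vectors; the scaling rule, `fraction` and the clamp values are hypothetical choices, not taken from the project.

```python
def look_ahead_steps(num_latent_steps, fraction=0.25, min_steps=1, max_steps=12):
    """Hypothetical rule: scale the CPC prediction horizon K with clip length."""
    k = int(num_latent_steps * fraction)
    # Clamp so very short clips still predict at least one step ahead and
    # very long clips do not get an unbounded horizon.
    return max(min_steps, min(max_steps, k))


def prediction_pairs(num_latent_steps, k_max):
    """All (context position t, target position t + k) pairs for k = 1..k_max."""
    return [
        (t, t + k)
        for k in range(1, k_max + 1)
        for t in range(num_latent_steps - k)
    ]


short_k = look_ahead_steps(8)     # short clip -> small horizon
long_k = look_ahead_steps(200)    # long clip -> horizon hits the clamp
pairs = prediction_pairs(5, 2)
```

With a fixed K, a short clip offers few valid (context, target) pairs at the largest offsets, while a long clip is only ever asked to predict a small fraction of its length ahead. Scaling K with length keeps the prediction task more comparable across durations, although whether this actually improves clustering of long sounds such as air conditioners would need to be tested.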

Although the rumbles did not cluster in the way we expected, the data-loading pipeline is reusable, and the clustering method showed that it can readily separate data into distinct groups. Both should be useful in future work aimed at gaining more insight into elephant communication.

[1] Michael Reinwald, Ben Moseley, Alexandre Szenicer, Tarje Nissen-Meyer, Sandy Oduor, Fritz Vollrath, Andrew Markham and Beth Mortimer. 2021 Seismic localization of elephant rumbles as a monitoring approach. J. R. Soc. Interface. 18. 20210264. http://doi.org/10.1098/rsif.2021.0264

[2] Aaron van den Oord, Yazhe Li and Oriol Vinyals. 2019 Representation learning with contrastive predictive coding. arXiv:1807.03748.