13 Aug 2025

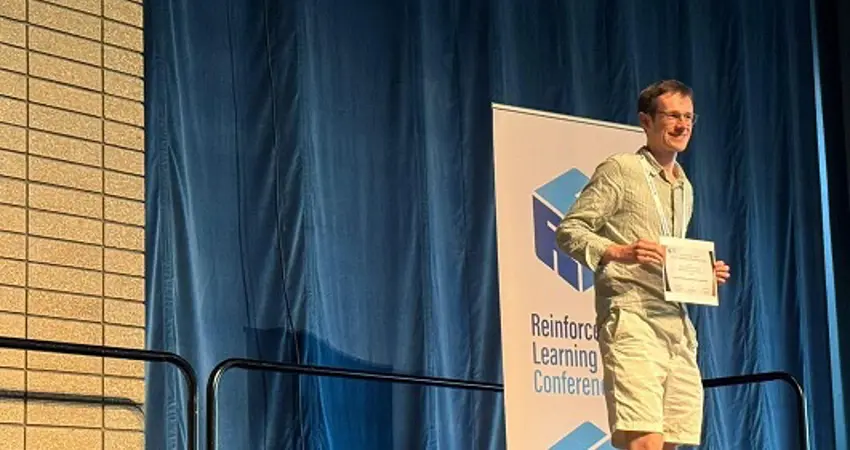

Alex Goldie receives ‘Outstanding Paper for Scientific Understanding in Reinforcement Learning’ at RLC 2025, in Alberta

Alex Goldie (AIMS CDT) receives the award for ‘Outstanding Paper for Scientific Understanding in Reinforcement Learning’ at RLC 2025, in Alberta.

The paper is titled ‘How Should We Meta-Learn Reinforcement Learning Algorithms?’.

The conference organisers said the following:

This paper provides a crucial empirical study that significantly advances the scientific understanding of how to meta-learn reinforcement learning (RL) algorithms. It directly compares several meta-learning approaches—including black-box learning, distillation, and LLM proposals—and offers a clear analysis of their trade-offs in terms of performance, sample cost, and interpretability. The findings provide actionable recommendations to help researchers design more efficient and effective approaches for meta-learning RL algorithms.

At a high level, our goal was to explore the best way to discover algorithms from data rather than by hand. There is a range of meta-learning algorithms that people have proposed in the past, such as evolving neural networks or asking LLMs to propose and refine new algorithm functions.

However, prior literature contains little direct comparison of these methods. Similarly, many papers produce learned algorithms for reinforcement learning, but they rarely consider how the algorithm should be learned, focusing instead on what should be learned, such as an optimiser or a loss function. In this work, we attempt to unify these lines of research by directly comparing different meta-learning algorithms across a set of learned algorithms. Our comparison covers several characteristics of each meta-learning algorithm, such as its performance, sample cost and interpretability, given particular characteristics of the learned algorithm.

As a result, we produce a set of recommendations for researchers working in this space.

Abstract: The process of meta-learning algorithms from data, instead of relying on manual design, is growing in popularity as a paradigm for improving the performance of machine learning systems. Meta-learning shows particular promise for reinforcement learning (RL), where algorithms are often adapted from supervised or unsupervised learning despite their suboptimality for RL. However, until now there has been a severe lack of comparison between different meta-learning algorithms, such as using evolution to optimise over black-box functions or LLMs to propose code. In this paper, we carry out this empirical comparison of the different approaches when applied to a range of meta-learned algorithms which target different parts of the RL pipeline. In addition to meta-train and meta-test performance, we also investigate factors including the interpretability, sample cost and train time for each meta-learning algorithm. Based on these findings, we propose several guidelines for meta-learning new RL algorithms which will help ensure that future learned algorithms are as performant as possible.

Alex is supervised by Jakob Foerster and Simon Whiteson.

Link to the paper: https://arxiv.org/pdf/2507.17668

Twitter: @AlexDGoldie

Email: goldie@robots.ox.ac.uk